Capitalism wastes money chasing the new shiny tech thing

Yeah, we know. AI’s not special.

And I was always taught that capitalism allocates the resources ideally. /s

The market is rational, that’s why casinos have so many customers!

*Probably typed on a smartphone, one of the most technology-dense products ever created by humanity, currently used by over half of humanity.

This approach has never worked but I admire your devotion to it.

It might be in the volume and price of projects

You can’t spell fail without AI.

feɪl

phayl

Human creativity for the win!

Thank you for using IPA instead of other cheap beers.

I saw a Dell billboard the other day saying they put the AI in IPA, and it had a picture of a laptop and a beer.

Wait, are they saying that if you remove them (AI), then you’re just left with P? That’s kinda funny.

Фэил ("fail" spelled in Cyrillic)

I think the whole system of venture capital might be garbage. We have bros spending millions of dollars on things like GIF sharing while the oceans boil, our schools rot, and our infrastructure rusts or is sold off. Or, I guess I’m just indicting capitalism more generally. But having a few bros decide what to fund based on gut feel and PowerPoints seems like a particularly malignant form.

You think it might be??

Bro, say that shit with some confidence.

Venture capital does not contribute beneficially to society.

Say it with your whole chest and both feet. Cuz it’s true.

Venture Capital is probably the best way to drain the billionaires. Those billions in capital weren’t wasted, that money just went to pay people who do actual work for a living. What good is all that money doing just sitting in some hedge fund account?

I don’t think it’s the best way out of all possible options. Even if it does “create jobs”, a lot of those jobs aren’t producing much of wider value, and most of the wealth stays in the hands of the ownership class. And a lot of the jobs are exploitive, like how “gig workers” are often treated.

Changes to tax law and enforcing anti-trust stuff would probably be more effective. We probably shouldn’t have bogus high finance shenanigans either. We definitely shouldn’t have billionaires.

Oh sure, I was mostly being flippant. My response to the article is basically that billionaires losing billions is a good thing. I don’t feel optimistic enough to say we’ll get around to taxing them but yes, that would be ideal.

The world is burning and the rich know this so they are desperate to multiply their money and secure their luxury survival bunkers, which is why they are gambling harder.

Oh yeah, I think I read about Zuckerberg building a bunker in Hawaii. Hopefully he dies before he can enjoy it.

It’s not just fuckerberg, EVERY billionaire is doing it and desperately pumping their billionaire friends for tips and suggestions on things like ‘keeping guards loyal for multiple generations’, and ‘what commodities to hoard for trading after the collapse’.

One of the sites I used to support was a high-end automation service, normally for factory equipment and biotech but pivoted to luxury home automation (no IoT devices, all site hosted with aerospace grade equipment), and they have been running at 100% for the last seven years deploying to ultra wealthy residential estates where the location is not disclosed.

The wealthy are expecting us to rise up within the next decade and a half, and I think they’re probably right.

Isn’t it good that the money is being put into circulation instead of being hoarded? I’m all in for the wealthy wasting their money.

The problem is the bulk of it is going to Nvidia.

Well, probably not just Nvidia, but the next likely beneficiaries are in the same range (Microsoft, etc.).

The money goes to Microsoft/Google/Amazon/etc., which then goes to Nvidia.

Yeah, instead of building useful tech to fight climate change, the brightest minds spend their lives building vanity AI projects. Computational resources that could be folding proteins or whatever are wasted on gradient descent for some useless model.

All while working class wages are stagnant. And so your best career advice is to go get a random tech degree so you could also work on vanity stuff and make money.

This is the cryptocurrency equivalent. It’s worse than CEOs buying yachts. The latter actually leads to some innovation.

Successfully creating an actual AGI would be by far the biggest and most significant invention in human history, so I can’t blame them for trying.

A bunch of people fine-tuning an off-the-shelf model on a proprietary task only to fail horrendously will never lead to any progress, let alone AGI.

So, nobody is trying AGI.

If all those people would actually collectively work on a large-scale research project, we’d see humanity advance. But that’s exactly my point.

“Nobody is trying AGI” is simply not true. If you think what they’re doing will never lead to AGI, then that’s an opinion you’re free to have, but it’s still just that: an opinion. Our current LLMs are by far the closest resemblance to AGI that we’ve ever seen. That route may very well be a dead end, but it may also not be. You can’t know that.

Oh gosh, look, an AI believer.

No, LLMs will not lead to AGI. But even if they did, applying existing tech to a new problem only to fail cuz you’re dumb at estimating the complexity does not, in fact, improve the underlying technology.

To paraphrase in a historical context: no matter how many people run around with shovels digging the ground for something, it will never lead to an invention of the excavator.

Ad hominem and circular reasoning aren’t valid counter-arguments. You’re not even attempting to convince me otherwise; you’re just being a jerk.

I’m an AI Engineer, been doing this for a long time. I’ve seen plenty of projects that stagnate, wither and get abandoned. I agree with the top 5 in this article, but I might change the priority sequence.

Five leading root causes of the failure of AI projects were identified:

- First, industry stakeholders often misunderstand — or miscommunicate — what problem needs to be solved using AI.

- Second, many AI projects fail because the organization lacks the necessary data to adequately train an effective AI model.

- Third, in some cases, AI projects fail because the organization focuses more on using the latest and greatest technology than on solving real problems for their intended users.

- Fourth, organizations might not have adequate infrastructure to manage their data and deploy completed AI models, which increases the likelihood of project failure.

- Finally, in some cases, AI projects fail because the technology is applied to problems that are too difficult for AI to solve.

4 & 2 —> 1: IF they even have enough data to train an effective model, most organizations have no clue how to handle the sheer variety, volume, velocity, and veracity of the big data that AI needs. Handling that is a specialized engineering discipline (data engineer). Then there’s deploying and managing the infra that models need, and a specialized discipline has emerged to handle that aspect too (ML engineer). Often they sit at the same desk.

1 & 5 —> 2: Stakeholders seem to want AI to be a boil-the-ocean solution. They want it to do everything and be awesome at it. What they often don’t realize is that AI can be a really awesome specialist tool that really sucks at scenarios it hasn’t been trained on. Transfer learning is a thing, but that requires fine-tuning and additional training. Huge models like LLMs are starting to bridge this somewhat, but at the expense of really sharp specialization. So without a really clear understanding of what AI can do really well, and perhaps more importantly, which problems are a poor fit for AI solutions, of course these projects are destined to fail.

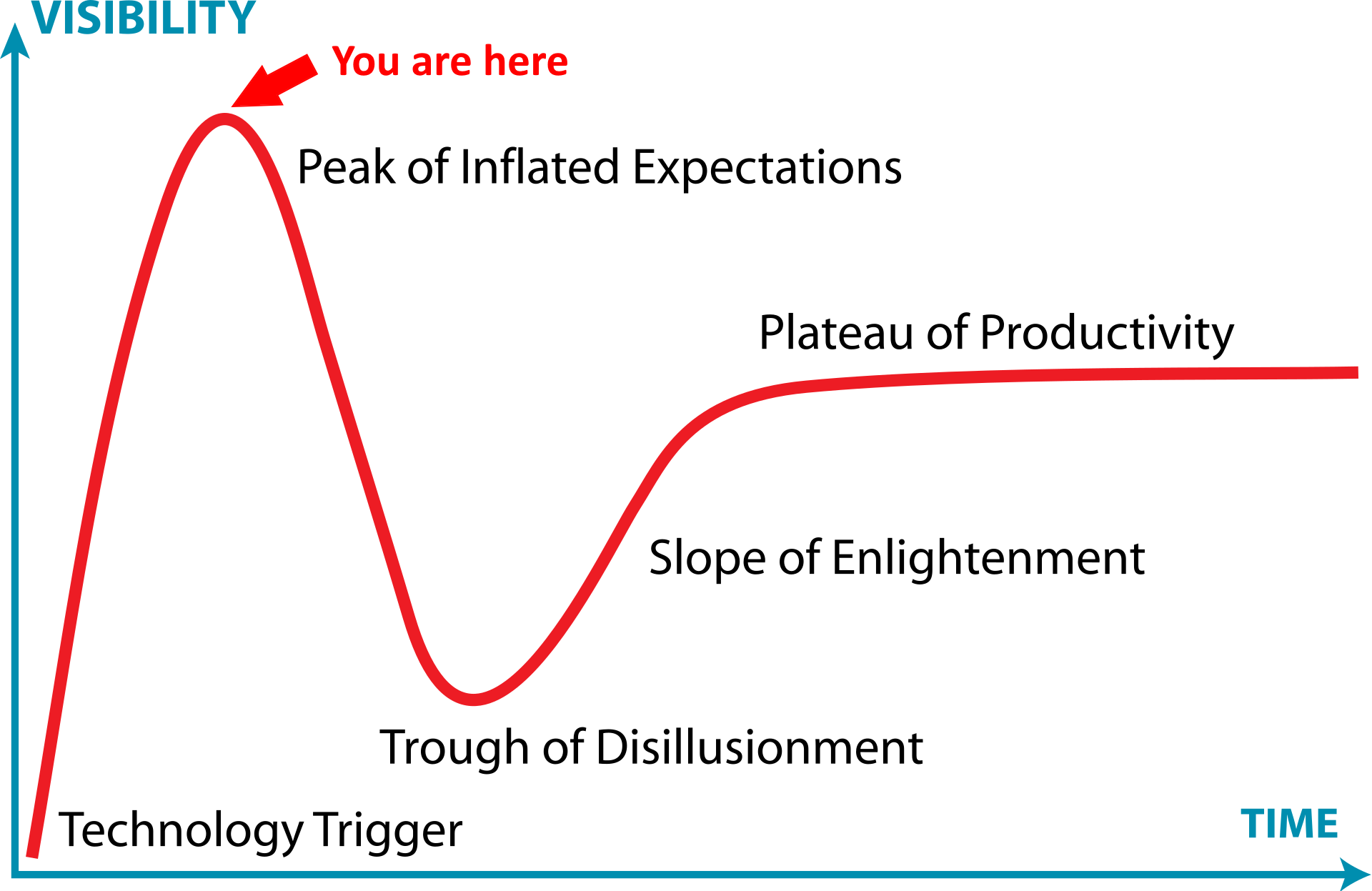

3 —> 3: This isn’t a problem with just AI. It’s all shiny new tech. Standard Gartner hype cycle stuff. Remember how they were saying we’d have crypto-refrigerators back in 2016?

Re 1, 3 and 5: maybe it is up to AI projects to stop offering shiny solutions looking for a problem to solve, and to engage properly with potential customers and stakeholders to get a clear understanding of the problems that need solving.

This was precisely the context of a conversation I had at work yesterday. Some of our product managers attended a conference that was rife with AI stuff, and a customer rep actually took to the stage and said ‘I have no need for any of that because none of it helps me solve the problems I need to solve.’

I don’t disagree. Solutions finding problems is not the optimal path—but it is a path that pushes the envelope of tech forward, and a lot of these shiny techs do eventually find homes and good problems to solve and become part of a quiver.

But I will always advocate starting with the customer and working backwards from there to arrive at the simplest engineered solution. Sometimes that’s an ML model. Sometimes an expert system. Sometimes a simpler heuristics/rules-based system. That all falls under the ‘AI’ umbrella, by the way. :D

Not to derail, but may I ask how you became an AI Engineer? I’m a software dev by trade, but it feels like a hard field to get into even if I start training for the AI part of it, because I’d need the data to practice =(

But it’s such a big buzzword that I feel like I need to start looking in that direction if I want to stay employed.

For me, it helps to have a project. I learned scikit-learn in order to analyze trading data. I was focusing on crypto, but there’s lots of trading data available in general. I didn’t make any money or anything, but I learned some basic techniques. It was fun to learn more about data processing, statistics, and modeling with functions.

(FWIW I’m crypto-neutral depending on the topic and anti-“AI” because it doesn’t exist.)

if I want to stay employed

I think this is a little paranoid. Somebody has to handle the production models: deploying them to servers, maintaining the servers, developing the APIs and front ends that provide access to the models… I don’t think software dev jobs are going anywhere.

Also in the industry and I gotta say it’s not often I agree with every damn point. You nailed it. Thanks for posting!

AI is a ponzi scheme to relieve stupid venture capitalists of their money.

To be fair, a large fraction of software projects fail. AI is probably worse because there’s often little notion of how AI actually applies to the problem, so execution is hampered from the start.

https://www.nbcnews.com/id/wbna27190518

https://www.zdnet.com/article/study-68-percent-of-it-projects-fail/

The interviews revealed that data scientists sometimes get distracted by the latest developments in AI and implement them in their projects without looking at the value that it will deliver.

At least part of this is due to resume-oriented development.

Most people don’t want to pay for AI. So they are building stuff that costs a lot for a market that is not willing to pay for it. It is mostly a gimmick for most people.

And like, it’s not even a good gimmick. It’s a serious labour issue because the primary intent behind a lot of AI has always been to just phase out workers.

I’m all for ending work through technological advancement and universal income, but this definitely wasn’t going to get us that, so…

Well, why would I support something that mostly just threatens people’s livelihoods and gives even more power to the 0.1%?

Exactly. I have used quite a few products, and my thought has been: “That’s cool, but when would I ever need this?” The few useful use cases I have for it could be handled by a small local model for very specific purposes, and that’s it. Not make-them-billions-of-dollars levels of usefulness.

Is that better or worse than IT and software projects in general? It sounds like it might be better.

From the article - “which is twice the failure rate for non-AI technology-related startups.”

I guess I should a) read the article and b) have a slightly better outlook on the field I’m in.

Here’s a fitting AI-generated Porky

I’ve been reading a book about Elizabeth Holmes and the Theranos scam, and the parallels with Gen AI seem pretty astounding. Gen AI is known to be so buggy the industry even created a euphemistic term so they wouldn’t have to call it buggy: Hallucinations.

When did brute force switch from being an antipattern to the preferred pattern?

When normies angry at feeling stupid came into the equation.

All those (stubbornly) computer-illiterate people - have you tried really irritating them? What they’ll say at those moments is valuable.

They will say that computing can’t be that complex. That a company’s annual activity reports are complex, that hydroelectric stations are complex, that car engines are complex. That the history of art is complex, that fashion is complex, that relationships are complex. That politics is complex, that the economy is complex, that law is complex.

But computing is not, it’s just hard to do for normal people. All those freaks doing it have just built the whole industry for their own convenience. They are all openly or secretly autistic.

And now it’s too expensive to switch from their freakish computing to computing understandable for normals.

We just have to break their resistance or outsmart them or bribe some of them so that they’d remake computing the normal way. But we can’t do that immediately. It takes a lot of money, but the end result is worth it.

/s

This was also the reason normies were so hell-bent on using social networks and other new shiny things as opposed to what existed before them in the 2000s web. Or why they were so excited about Apple. EDIT: Precisely because we (in their opinion, the people holding the bottleneck and collecting the Sound Toll; a privileged group; some freaks; autistic children playing with toys whom they can’t accept as being smarter) were advising against those.

And now they are being literally promised the computer that works without programmers! And it even does some tricks to show them it works!

If any normies are reading this, don’t feel insulted, it’s only about the dumbest of you, and also it’s not that harsh, you haven’t seen what medical professionals write about normies.

I am a well educated person who uses these forums and many others with regularity and I have many opinions on tech after working in both marketing and the tech sector for a long time.

That out of the way, I will simply skip over any comment that says “normies” unironically. Especially over and over.

This isn’t fucking 4chan, communicate like a human like the rest of us. You don’t get out of being one of us. I don’t even know your take because it’s so distracting and immature and condescending.

This guy gets it.

Incel lingo is fundamentally disgusting in so many ways.

What does the word “normie”, which is a derivative of “normal”, have to do with incels, who are a subculture of unlikable people calling themselves “involuntarily celibate” (which can’t be true if there are at least two incels near each other)?

It doesn’t matter where it came from, if you’re steeped in this kind of language it’s a massive signpost that you’ve handicapped your own intellectual abilities in a profound way. Healthy, normal people with regulated feelings and stable perspectives grounded in reality do not frequent the communities that use this kind of language.

It’s a red flag that will always make the outside world laugh and reject what you have to say, and if your instinct is to retreat back into the places that use this language, you are going to absolutely SUFFER in life. This is a warning coming from a place of compassion; you HAVE to believe me.

I use all kinds of language when it fits my meaning.

I don’t think anything of what you said makes sense in this situation.

Do you honestly think your choice of words in this post led to you being heard and understood? Or do you think that everyone is just being mean bullies? THiiiiiiink hard about this one.

I stopped at “normies”. Lose the ego and grow up if you want people to listen to your opinions.

Removed by mod

As one of those weird autists who make computing too hard, and who’s been using Apple products for decades, I really wonder where I fit in.

Removed by mod

Oh, I have no illusions that I’m smarter than other people at their chosen profession. Hell, I’m an idiot at my chosen profession quite often.

Though I do wish people would stop calling me a “miracle worker” and “wizard” because I can get the wifi working.

As someone tired of this shit: you fit in just fine. I’m only approaching that stage, but I can clearly feel it.

Welcome to AI:

The hype-cycle is the exception, not the norm. Very commonly stuff just ends up dying.

Wasting?

A bunch of rich guys’ money going to other people, enriching some of the recipients, in hopes of making the rich guys even richer? And the point of AI is to eliminate jobs that cost rich people money?

I’m all for more foolish AI failed investments.

It makes rich guys even richer, at the expense of other rich guys and whatever fools it attracts.

It’s a circle jerk; don’t get fooled into thinking this is some new version of trickle-down economics.