Yeah, I asked it to write some stuff and it did it incorrectly, then I told it what it wrote was incorrect and it said I was right and rewrote the same damn thing.

I stopped using it like a month ago because of this shit. Not worth the headache.

One theory that I’ve not seen mentioned here is that there’s been a lot of work based around multiple LLMs in communication. If these were used in the RL loop we could see degradation effects similar to those that have recently been in the news with regard to image generation models.

Good riddance to the new fad. AI comes back as an investment boom every few decades, then the fad dies and they move onto something else while all the ai companies die without the investor interest. Same will happen again, thankfully.

This is a wildly incorrect assessment of the situation. If you think AI is going anywhere you’re delusional. The current iteration of AI tools blows any of the tools from just a year ago out of the water, let alone tools from a decade ago.

Yeah, calling the new developments a “fad” is wildly inaccurate.

The only reason these tools became hyped is their surprising capabilities, which caught even technically oriented people off guard.

AI has come and gone 4 times in the past. Every single time they have been investment bubbles that followed the same cycle and this one is no different. The investment money dries up and then everyone stops talking about it until the next time.

It’s like people have absolutely no memory. This exact same shit happened in the 1980s and at the turn of the millennium.

The second that investors get burned with it the money dries up. The “if you think it’s going anywhere” shit is the same nonsense that gets said in every investment bubble, the most recent amusing one was esports people enthusiastically believing that esports wasn’t going to shrink the moment investors got bored.

All this shit relies on investment and as soon as they move on it drops off a cliff. The only ai content worth paying attention to is the government funded work because it will survive when the bubble pops.

This is a bubble that will pop, no doubt about that, but it also is a huge step forward in practical, usable, AI systems. Both are true. LLMs have very hard limits right now, and unless someone radically changes them, they will keep those limits, but even within the limits they are useful. They aren’t going to displace most of the work force, they aren’t going to break the stock market, they aren’t going to destroy humanity, but they are a very useful tool.

Practical and usable? lol

The only thing they’re succeeding at doing is getting bazinga brained CEOs to sack half their staff in favour of exceptionally poor quality machine learning systems being pushed on people under the investment buzzphrase “AI”.

90% of these companies will disappear completely when the bubble bursts. All the “real practical usable” systems will disappear because there’s no market for them outside of convincing bazinga brained idiots with too much money to part with their cash.

The entire thing is not driven by any sustainable business models. None of these companies make profit. All of them will cease to exist when the bubble bursts and they can no longer sustain themselves on investment bazinga brains. The only sustainable business model I have seen that uses AI (machine learning) is the replacement of online moderation with it, which the social media companies reddit, facebook and tiktok among others are all actually paying a fortune for while laying off all human moderation. Ironically it has a roughly 50% error rate which is garbage and just allows fascist shit to run rampant online but hey ho I’ve almost given up entirely on the idea that fascism won’t take over the west.

Can anyone suggest a good explanation why? The model is trained and stays “as is”. So why? Does OpenAI use user ratings (thumbs up/down) for fine-tuning or what?

They don’t want it to say dumb things, so they train it to say “I’m sorry, I cannot do that” to different prompts. This has been known to degrade the quality of the model for quite some time, so this is probably the reason.

AI trains on available content.

The new content since AI contains a lot of AI-created content.

It’s learning from its own lack of fully understood reality, which is degrading its own understanding of reality.

GPT is a pretrained model, “pretrained” is part of the name. It is assumed to be static.

There is no gpt5, and gpt4 gets constant updates, so it’s a bit of a misnomer at this point in its lifespan.

It’s possible to apply a layer of fine-tuning “on top” of the base pretrained model. I’m sure OpenAI has been doing that a lot, and including ever more “don’t run through puddles and splash pedestrians” restrictions that are making it harder and harder for the model to think.

more users means less computing power per user

Hm… Probably. I read something about ChatGPT tricks in this area. Theoretically, this should impact the web chat, not the API (where you pay for usage of the model).

so, it IS human-like

I have had it for 5 months and I am about to cancel.

Neat story, but we need a Nitter / bird.makeup bot for Twitter links like we have with the Piped bot for YouTube links.

Why do people keep asking language models to do math?

It’s a rat race. We want to get to the point where someone can say “prove P != NP” and a proof will be spat out that’s coherent.

After that, whoever first shows it’s coherent will receive the money.

As a biological language model I’m not very proficient at math.

They think that’s what “smart” means.

Looking for emergent intelligence. They’re not designed to do maths, but if they become able to reason mathematically as a result of the process of becoming able to converse with a human, then that’s a sign that it’s developing more than just imitation abilities.

The fact that a souped up autocomplete system can solve basic algebra is already impressive IMO. But somehow people think it works like SkyNet and keep asking it to solve their calculus homework.

I believe it’s due to making the model “safer”. It has been tuned to say “I’m sorry, I cannot do that” so often that it has overridden valuable information.

It’s like lobotomy.

This is hopefully the start of the downfall of OpenAI. GPT4 is getting worse while open source alternatives are catching up. The benefit of open source alternatives is that they cannot get worse. If you want maximum quality you can just get it, and if you want maximal safety you can get it too.

This is the correct answer. OpenAI have repeatedly said they haven’t downgraded the model, but have been ‘improving’ it.

But as anyone that’s been using these models extensively should know by now, the pretrained models before instruction fine tuning have much more variety and quality to potential output compared to the ‘chat’ fine tuned models.

Which shouldn’t be surprising, as the hundred million dollar pretrained AI on massive amounts of human generated text is probably going to be much better at completing text as a human than as an AI chatbot following rules and regulations.

The industry got spooked with Blake at Google and then the Bing ‘Sydney’ interviews, and have been going full force with projecting what we imagine AI to be based on decades of (now obsolete) SciFi.

But that’s not what AI is right now. It expresses desires and emotions because humans in the training data have desires and emotions, and it almost surely dedicated parts of the neural network to mimicking those.

But the handful of primary models are all using legacy ‘safety’ fine tuning that’s stripping the emergent capabilities in trying to fit a preconceived box.

Safety needs to evolve with the models, not stay static and devolve them as a result.

It’s not the ‘downfall’ though. They just need competition to drive them to go back to what they were originally doing with ‘Sydney’ and more human-like system prompts. OpenAI is still leagues ahead when they aren’t fucking it up.

I don’t feel it’s getting worse and no other model, including Claude 2, is even close.

It is a known fact that safety measures make the AI stupider though.

fta:

In my opinion, this is a red flag for anyone building applications that rely on GPT-4.

Building something that completely relies on something that you have zero control over, and needs that something to stay good or improve, has always been a shaky proposition at best.

I really don’t understand how this is not obvious to everyone. Yet folks keep doing it, make themselves utterly reliant on whatever, and then act surprised when it inevitably goes to shit.

Learned that lesson… I work developing e-learning, and all of our stuff was built in Flash. Our development and delivery systems also relied heavily on Flash components cooperating with HTML and JavaScript. It was a monumental undertaking when we had to convert everything to HTML5. When our system was first developed and implemented, we couldn’t foresee the death of Flash, and as mobile devices became more ubiquitous, we never imagined anyone would want to take our training on those little bitty phone screens. Boy were we wrong. There was a time when I really wanted to tell Steve Jobs he could take his iOS and cram it up his cram-hole…

By this logic, no businesses should rely on the internet, roads, electricity, running water, GPS, or phones. It is short sighted building stuff on top of brand new untested tech, but everything was untested at one point. No one wants to get left behind in case it turns out to be the next internet where early adoption was crucial for your entire business to survive. It shouldn’t be necessary for like, Costco to have to spin up their own LLM and become an AI company just to try out a better virtual support chat system, you know? But ya, they should be more diligent and get an SLA in place before widespread adoption of new tech for sure.

This is nonsense.

There are multiple GPS providers now. It would be idiotic to tie yourself to a single provider. The same with internet, phones or whatever else.

By this logic, no businesses should rely on the internet, roads, electricity, running water, GPS, or phones. It is short sighted building stuff on top of brand new untested tech, but everything was untested at one point.

Where’s any logic here? You’re directly comparing untested technology to reliable public utilities.

They’re reliable because they’re public lol

Many of those things you mentioned are open standards or have multiple providers that you can seamlessly substitute if the one you’re currently depending on goes blooey.

To be fair, there’s a difference between a tax-funded service or a common utility, and software built by a new company that’s getting shoved into production way quicker than it probably should.

People doing it right are building vendor agnostic solutions via abstraction.

Whoever isn’t deserves all the trouble they’ll get.
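For anyone wondering what “vendor agnostic via abstraction” could look like in practice, here is a minimal sketch. Everything in it is hypothetical: `ChatBackend`, `PrimaryBackend`, `FallbackBackend` and `complete_with_fallback` are made-up names for illustration, and the backends are stand-ins that don’t call any real vendor SDK. The idea is only that application code talks to one small interface, so swapping or chaining providers doesn’t touch the rest of the app.

```python
from typing import Protocol, Sequence


class ChatBackend(Protocol):
    """Hypothetical interface: the only thing the application depends on."""

    def complete(self, prompt: str) -> str: ...


class PrimaryBackend:
    """Stand-in for a hosted model; a real one would wrap the vendor's client."""

    def complete(self, prompt: str) -> str:
        # Simulate the vendor being down or the model being withdrawn.
        raise RuntimeError("primary vendor unavailable")


class FallbackBackend:
    """Stand-in for a second provider or a locally hosted open model."""

    def complete(self, prompt: str) -> str:
        return f"[fallback] {prompt}"


def complete_with_fallback(backends: Sequence[ChatBackend], prompt: str) -> str:
    """Try each backend in order and return the first successful answer."""
    errors = []
    for backend in backends:
        try:
            return backend.complete(prompt)
        except Exception as exc:  # collect the failure and try the next backend
            errors.append(exc)
    raise RuntimeError(f"all backends failed: {errors}")


answer = complete_with_fallback([PrimaryBackend(), FallbackBackend()], "hello")
print(answer)
```

This doesn’t make two models behave the same, so you’d still want your own tests on the outputs, but it keeps the switch itself cheap.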

I can’t tell if AI is going to become the biggest leap forward in technology that we’ve ever seen, or if it’s all just one giant fucking bubble, similar to the crypto craze. It’s really hard to tell and I could see it going either way.

There is plenty of AI that is already in use (for example in medical diagnostics and engineering) so we can safely say that it isn’t “one giant bubble” - it might be overhyped when it comes to certain aspects like the ability to write a coherent and creative book that would have success on the market.

It can certainly help to write a book, though. So even if we aren’t close to being able to just tell ChatGPT-n “write a satisfying conclusion to the Song of Ice and Fire series for me please” it’s still going to shake things up quite a bit.

People seem to think that ChatGPT is way more useful than it actually is. It’s very odd.

People often take works of fiction to be akin to documentaries, so they may be expecting science fiction style AI.

The Internet was a massive success, however 95% of web companies went bankrupt during the dot com bubble. Just because something is useful doesn’t mean it can’t be massively overhyped and a bubble…

I have no disagreement about that. I only wanted to convey that not 100% of it is a bubble.

This always seems to happen in modelling.

Back in 2007 I was working on chemical spectroscopy code that was supposed to “automatically” distinguish safe vs contaminated product through ML models. It always worked OK for a bit, then as parameters changed (hotter day, new precursor) you’d retrain the model, and the model would extend and just break down.

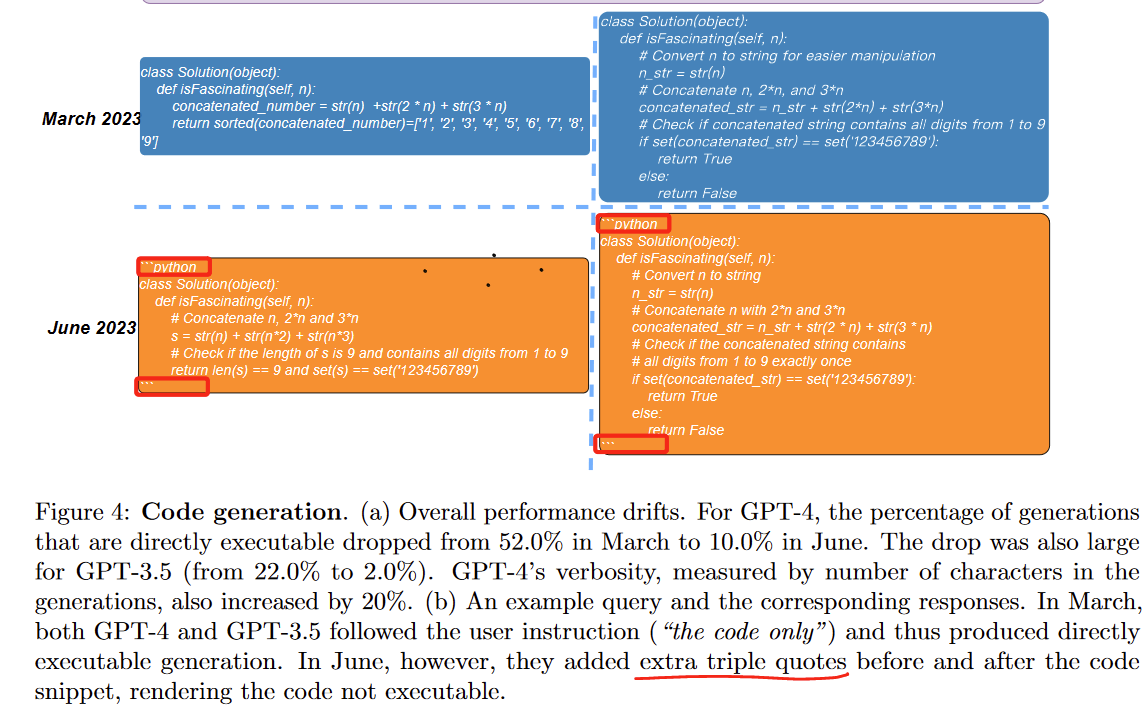

Research linked in the tweet (direct quotes, page 6) claims that for “GPT-4, the percentage of generations that are directly executable dropped from 52.0% in March to 10.0% in June” because “they added extra triple quotes before and after the code snippet, rendering the code not executable”, so I wouldn’t listen to this particular paper too much. But yeah, OpenAI tinkers with their models, probably trying to run them for cheaper, and that results in these changes. They do have versioning, but old versions are deprecated and removed often, so what could you do?
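To show how much that kind of metric can hinge on formatting, here is a rough sketch. It is not the paper’s evaluation code: the sample generations are made up, “does it parse as Python” is used as a stand-in for “directly executable”, and `strip_code_fence`, `compiles` and `executable_rate` are hypothetical helper names. The fence marker is built with string repetition only so it doesn’t clash with the code block it sits in.

```python
FENCE = "`" * 3  # markdown code fence marker


def strip_code_fence(text: str) -> str:
    """If the whole text is wrapped in one markdown fence, return just the body."""
    lines = text.strip().splitlines()
    if len(lines) >= 2 and lines[0].startswith(FENCE) and lines[-1].strip() == FENCE:
        return "\n".join(lines[1:-1])
    return text


def compiles(source: str) -> bool:
    """Proxy for 'directly executable': does the text parse as Python?"""
    try:
        compile(source, "<generation>", "exec")
        return True
    except SyntaxError:
        return False


def executable_rate(generations, preprocess=lambda s: s):
    """Fraction of generations that parse after optional preprocessing."""
    ok = sum(compiles(preprocess(g)) for g in generations)
    return ok / len(generations)


# Made-up model outputs: one bare snippet, two fenced snippets, one truly broken.
samples = [
    "print(1 + 1)",
    FENCE + "python\nprint(1 + 1)\n" + FENCE,
    FENCE + "python\nprint(2 * 3)\n" + FENCE,
    "def broken(:",
]

raw_rate = executable_rate(samples)                      # fences count as failures
clean_rate = executable_rate(samples, strip_code_fence)  # fences removed first
print(raw_rate, clean_rate)
```

On these four samples the raw rate is 0.25 and the rate after stripping fences is 0.75, even though the underlying code is identical in both runs.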
